| Author | John Libonatti-Roche |

| Date | 10th November 2023 |

Executive Summary

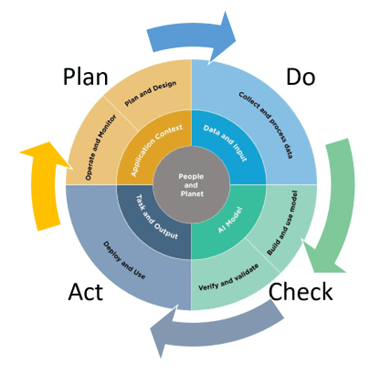

The NIST AI Risk Management Framework (NISTAIRMF 1.0) is a voluntary approach to AI risk management that aligns neatly with the Plan-Do-Check-Act model and with standard risk management techniques such as those provided by ISO-31000, ISO-27001 or PRINCE2.

The framework acknowledges the challenging trade-offs between organisational needs, AI system trustworthiness, accuracy and technical limitations in the context of their societal impacts and the regulatory controls that govern data subjects’ privacy.

It focuses attention on four domains, the NIST Core functions, to achieve its purpose: Govern, Map, Measure and Manage.

There is nothing new in applying a cycle of risk identification, treatment and monitoring, but its application to AI is both new and hugely important. In doing so, NIST suggests that organisations developing, deploying or using Artificial Intelligence tools should have a mature risk management process in place, and this bulletin provides an overview of how NIST proposes that this is achieved.

Overview

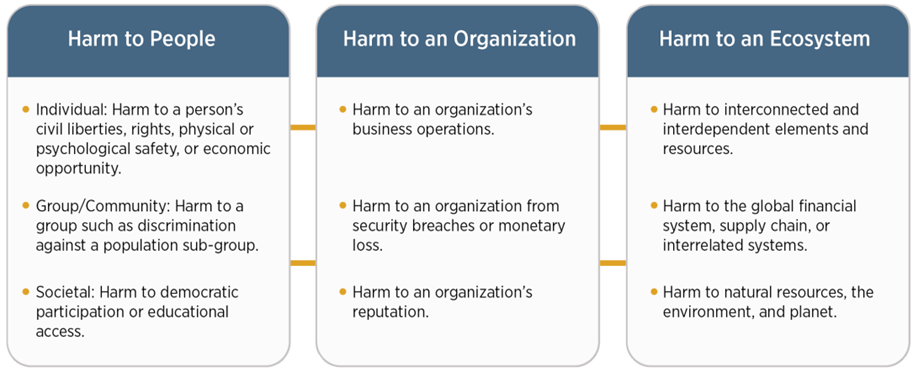

NIST, through its NISTAIRMF, identifies the following areas of potential harm (risk): harm to people, harm to an organisation and harm to an ecosystem.

In response, the framework identifies a set of critical characteristics for AI systems that, if delivered appropriately, will reduce the likelihood and impact of those negative consequences. Those characteristics are:

- Valid and reliable

- Safe

- Secure and resilient

- Accountable and transparent

- Explainable and interpretable

- Privacy-enhanced

- Fair with harmful bias managed

NIST has identified an excellent set of characteristics of a trustworthy AI system, which it suggests can be delivered within four domains (referred to as NIST functions):

- Govern

- Map

- Measure

- Manage

Govern Function

Accountability is at the core of this function, which proposes the implementation of an organisation-wide culture of governance, risk management and training for those involved in the supervision, design, development, deployment, evaluation, acquisition or use of AI systems. It shares that aim with every successful risk management process, all of which also connect the technical aspects of (AI) system design, development and use with organisational values.

Like ISO-27001, NISTAIRMF also suggests establishing policies, procedures and processes to assess potential risks. It also promotes the idea that it is critical to success that the competencies of the individuals involved in acquiring, training, testing, deploying and monitoring those systems are adequate for the full product lifecycle.

The Govern function also strongly emphasises the need for a diverse team of actors in this process and the use of key principles (called categories) to establish:

- Appropriate Policies and Procedures

- Organisational Accountability

- Appropriate Competence through training

- Decision-making using a diverse team

- Good policies, procedures and processes to capture benefits whilst reducing adverse risks and aligning with organisational values

Map Function

The MAP function establishes the context – a mandatory requirement of ISO-27001 – to frame risks related to an AI system function with the intent of:

- Improving the organisational capacity for understanding contexts.

- Checking organisational assumptions about context of use.

- Recognising when systems are not functioning within their intended context.

- Identifying positive and beneficial uses of their existing AI systems.

- Improving understanding of limitations in AI and ML processes.

- Identifying real-world constraints that may lead to negative impacts.

- Identifying known, foreseeable negative impacts related to intended use of AI systems.

- Anticipating risks of the use of AI systems beyond intended use.

Measure Function

The MEASURE function asks that:

- Appropriate methods and metrics are identified and applied.

- AI systems are evaluated for trustworthy characteristics.

- Mechanisms for tracking identified AI risks over time are in place.

- Feedback about efficacy of measurement is gathered and assessed.

Trustworthiness is a key measure which, as explained earlier, requires (at least) that AI systems be valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, privacy-enhanced, and fair with harmful bias managed.

It is impossible to achieve that level of ‘trustworthiness’ without rigorous software testing, performance assessment and independent review, so these are also at the heart of this function.

Then, where ‘trustworthiness’ trade-offs arise, measurement can provide a traceable basis to inform management decisions. Possible management responses that are identified include recalibration, impact mitigation, or removal of the system from design, development, production, or use, as well as a range of compensating, detective, deterrent, directive, and recovery controls.
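As one illustration of the kind of metric the MEASURE function has in mind, the sketch below computes a demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The framework does not prescribe this or any other specific metric, and the function names and sample data here are hypothetical.

```python
def selection_rate(predictions):
    """Share of positive (1) predictions in a list of 0/1 values."""
    return sum(predictions) / len(predictions)


def demographic_parity_difference(predictions, groups):
    """Return (gap, per-group rates), where gap is the difference between
    the highest and lowest selection rate across groups."""
    by_group = {}
    for prediction, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(prediction)
    rates = {group: selection_rate(values) for group, values in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical model outputs (1 = approved) for two illustrative groups.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap, rates = demographic_parity_difference(predictions, groups)
print(rates)
print(f"Demographic parity difference: {gap:.2f}")
```

Recording a figure like this at each evaluation, alongside accuracy and other measures, gives the traceable basis for management decisions that the function describes. What counts as an acceptable gap is an organisational judgement, not something this sketch decides.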

Manage Function

This function puts in place plans for prioritising risk, regular monitoring and implementing improvements, resulting in an enhanced capacity to manage the risks of deployed AI systems, as follows:

- AI risks based on assessments and other analytical output from the MAP and MEASURE functions are prioritised, responded to, and managed.

- Strategies to maximise AI benefits and minimise negative impacts are planned, prepared, implemented, documented, and informed by input from relevant AI actors.

- AI risks and benefits from third-party entities are managed.

- Risk treatments, including response and recovery, and communication plans for the identified and measured AI risks, are documented and monitored regularly.
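The prioritisation step can be sketched as a simple risk register. The example below assumes a conventional likelihood-times-impact score on a 1 to 5 scale, which is a common risk management convention and not something NISTAIRMF mandates. The class name, fields and sample risks are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)
    treatment: str = "untreated"

    @property
    def score(self) -> int:
        # Conventional likelihood x impact scoring; other schemes are possible.
        return self.likelihood * self.impact


def prioritise(risks):
    """Return the risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


# Hypothetical entries drawn from MAP and MEASURE outputs.
register = [
    AIRisk("Training data drift", likelihood=4, impact=3),
    AIRisk("Third-party model outage", likelihood=2, impact=4),
    AIRisk("Re-identification from model outputs", likelihood=2, impact=5),
]

for risk in prioritise(register):
    print(f"{risk.score:>2}  {risk.name}  ({risk.treatment})")
```

In practice the register would also hold owners, review dates and the documented response and recovery plans, so that treatments can be monitored regularly.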

Privacy, PETs and trade-offs

Privacy requires the application of values such as:

- Anonymity

- Confidentiality

- Control over the integrity and availability of personal data

AI system design, development and deployment should be guided by these values but, certainly in Europe, all systems are regulated to ensure these rights are enforced.

Clearly, trade-offs will exist where the technical features of an AI system promote or reduce privacy and where privacy requirements may impact the value of the AI system. Examples include cases where inference identifies individuals or attributes previously private information to them, or where data sparsity, perhaps as a result of regulations or privacy-enhancing techniques, results in a loss of accuracy. Ironically, the latter may impact the fairness of the system outputs.

Despite these problems or trade-offs, opportunities exist to develop privacy-enhancing technologies (“PETs”) for AI, or to use data-minimising methods such as pseudonymisation, anonymisation and aggregation for model outputs, that would result in privacy-enhanced AI systems without loss of organisational benefits.
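Two of the data-minimising methods mentioned above can be sketched in a few lines. The example assumes pseudonymisation by keyed hashing (HMAC-SHA256) and aggregation with small counts suppressed. Both are common approaches rather than anything NISTAIRMF specifies, and the function names, key and sample records are hypothetical.

```python
import hashlib
import hmac
from collections import Counter


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash. Whoever holds the key can
    re-link records, so this is pseudonymisation, not anonymisation."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def aggregate_counts(values, min_count=3):
    """Count occurrences and drop groups smaller than min_count, so that
    small groups are not exposed in the published output."""
    counts = Counter(values)
    return {value: n for value, n in counts.items() if n >= min_count}


# Hypothetical key and records; a real key would live in a secrets store.
key = b"illustrative-key-only"
records = [
    ("alice@example.com", "Dublin"),
    ("bob@example.com", "Dublin"),
    ("carol@example.com", "Dublin"),
    ("dave@example.com", "Cork"),
]

pseudonymised = [(pseudonymise(email, key), city) for email, city in records]
city_counts = aggregate_counts(city for _, city in pseudonymised)
print(city_counts)
```

The suppression threshold illustrates the trade-off described earlier: raising `min_count` protects small groups but removes data, which may reduce accuracy for exactly those groups.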

Conclusion

Harnessing the capabilities of AI systems, optimising the benefits whilst reducing the negative impacts, is a hugely attractive proposition. The NIST AI Risk Management Framework is a voluntary framework to deliver this in an accountable, ethical, business-savvy way by:

- Focusing on what is important to the business, aligned with your societal responsibilities.

- Establishing an internal organisation accountable for delivering the full lifecycle of AI systems.

- Involving those with diverse skills and perspectives in that organisation.

- Focusing on delivering trustworthy systems using the experiences and toolsets of those who have delivered other important initiatives using global gold standards – like ISO-27001 (in the InfoSec arena).

- Testing, testing, testing (repeat) …

- Learning from the delivery and management of other critical systems in, for example, the air navigation industry.

- Using NISTAIRMF as a guide towards achieving those goals.

- Seeing regulatory compliance as a measure for achieving high-quality systems that provide your business with a USP with consequent benefits for global reach and sales.

